OpenText™ Documentum™ customers with large compliant repositories can face performance challenges during work order processing. To alleviate this, OpenText Documentum Retention Policy Services provides a WorkOrder framework that processes operations asynchronously in the background. Using multiple background processing threads enables higher throughput than is possible in the primary request-processing thread.

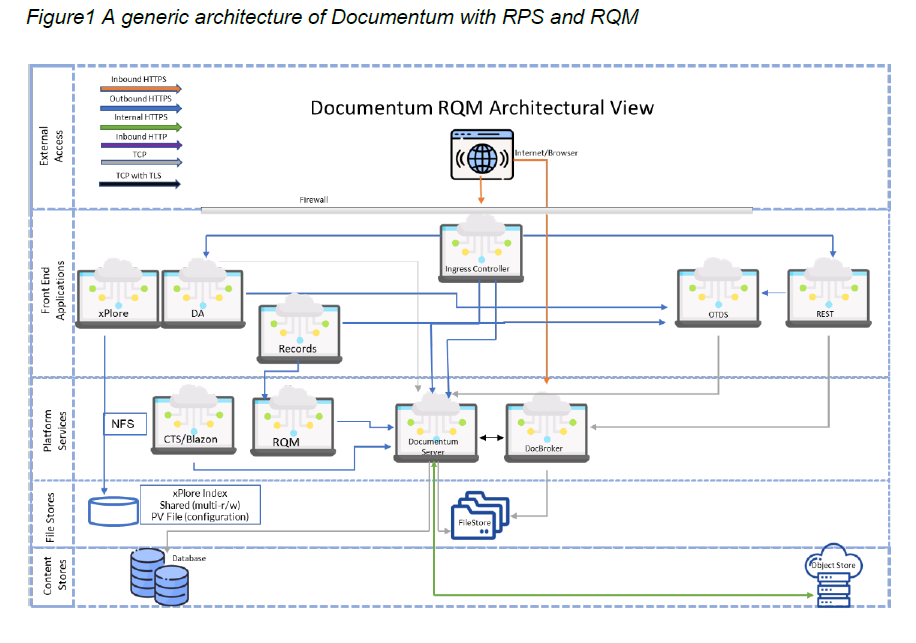

To use this functionality, the framework requires a queueing mechanism that allows the operations to be executed. This component is called Records Queue Manager (RQM), and it is built upon the asynchronous processing framework. This whitepaper describes the RQM architecture and provides tuning guidelines to achieve an optimal rate for applying retention.

Audience

The document is intended for a technical audience that is planning an implementation of OpenText Documentum Retention Policy Services products. OpenText recommends consulting with OpenText Professional Services, who can assist with the specific details of individual implementation architectures. IT administrators and solution integrators should review this document and note any performance characteristics and capacity considerations for RQM instances.

Introduction

OpenText Documentum® Retention Policy Services (RPS) enables organizations to configure content retention and disposition policies behind the scenes to meet business and compliance goals. These policies are applied and enforced automatically at the platform level, across all content types and enterprise-wide, without any action from end users, which minimizes end-user involvement and maximizes policy adherence. Using RPS, you can qualify a record for promotion within RPS lifecycles as it moves from one phase to the next. You can also prevent a record from reaching disposition if necessary. For example, if you are assigned the proper role (Retention Manager or Compliance Officer), you can apply a retention markup such as a hold to a record and prevent its disposition until the hold is removed.

Documentum RPS includes a major component called Records Queue Manager (RQM). RQM is a scalability framework that allows RPS and Records operations on large numbers of objects to be executed asynchronously. RQM wraps RPS tasks as work orders that are executed as custom tasks. Each work order is a persistent object in the Documentum repository, with attributes and content that record status information about what the work order is doing.

RQM Architecture

The Records Queue Manager (RQM) is a separate process that executes work orders and can be deployed on multiple machines. It is multi-threaded and can execute multiple work orders at the same time. The framework is designed so that work orders are independent of each other, which allows any work order to be executed by any thread in any RQM process.

Pieces of the Framework

The WorkOrder scalability framework used by RQM makes it possible to process RPS operations on large numbers of objects asynchronously. The benefits are:

- The framework gives Records clients and the API the ability to queue RPS and Records operations on large numbers of objects.

- The user regains control and can do other things while the framework processes the operation.

- The user can also start another operation and have it run at the same time as the first one.

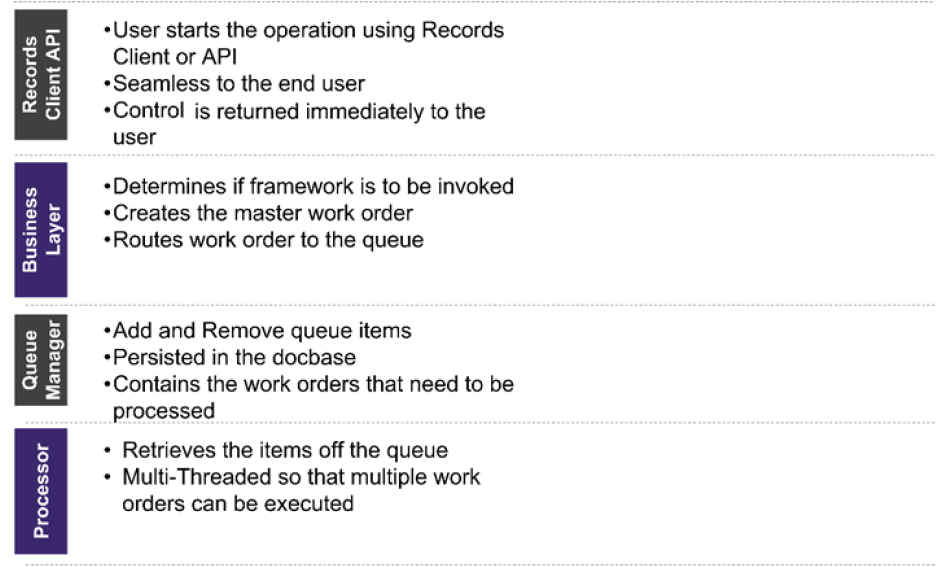

RQM is built from the following functional building blocks:

- A task to execute the work order.

- A profile to describe the task when adding a message to the queue.

- An API to put the message on a queue (dm_queue) with an associated custom task.

- The message itself, of type dmi_queue_item. Client applications retrieve the message from the queue, create a session, and execute the task.

- The task, which holds the object ID of the work order and the business logic to execute the TBO on the work order. RQM services execute a standard method on the task that in turn:

  - Loads the work order.

  - Executes the ProcessItems method on the work order.
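To make the split between the task and the work order concrete, here is a minimal Python sketch, assuming an in-memory dictionary in place of the docbase. The names `RqmTask`, `WorkOrder`, `process_items`, and the status strings are hypothetical illustrations of the roles described above (the task only holds the work order's object ID; the work order's ProcessItems logic does the work and records its progress), not the actual RPS classes or TBO API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class WorkOrder:
    """Hypothetical in-memory stand-in for a persistent work order object."""
    object_id: str
    objects: List[str]                 # objects the operation runs on
    status: str = "queued"
    processed: int = 0
    progress: List[str] = field(default_factory=list)

    def process_items(self, operation: Callable[[str], None]) -> None:
        # Mirrors the role of ProcessItems: apply the operation to each object,
        # record progress as it goes, then make a final status update.
        self.status = "running"
        for obj in self.objects:
            operation(obj)
            self.processed += 1
            self.progress.append(f"{self.processed}/{len(self.objects)}")
        self.status = "completed"


class RqmTask:
    """Hypothetical task attached to a queue message; knows only the work order ID."""

    def __init__(self, work_order_id: str) -> None:
        self.work_order_id = work_order_id

    def execute(self, repository: Dict[str, WorkOrder],
                operation: Callable[[str], None]) -> WorkOrder:
        work_order = repository[self.work_order_id]   # load the work order
        work_order.process_items(operation)           # execute ProcessItems
        return work_order
```

Keeping only the object ID in the task means the message stays small and any RQM thread can pick it up, because all state lives on the persistent work order.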

RQM can exist independently on a separate machine or in a virtualized image or container. More than one RQM process can service a queue, which enables load balancing across the processes that execute queue messages.

Work Orders

Each work order is a persistent object in the docbase, with attributes and content that record status information about what the work order is doing.

The work order contains a list of objects and the operation to execute on the objects.

For example, a work order may contain a list of objects to which a retention policy must be applied. The work order executes this operation, updates the status information on the work order in the docbase as it progresses, and does a final update once it has finished applying the policy to the documents.

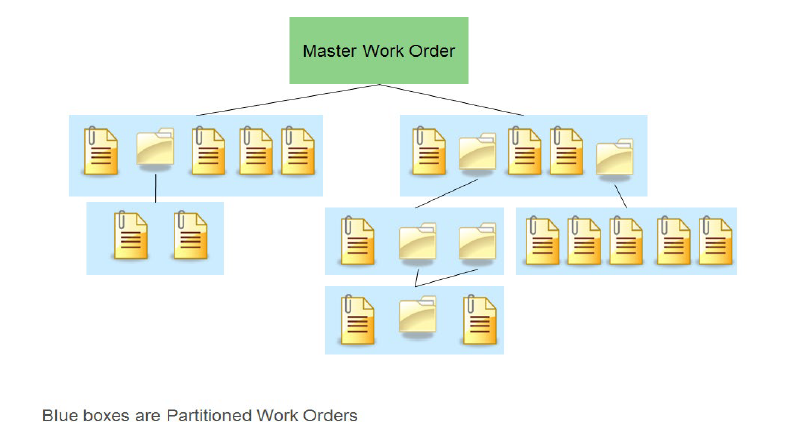

There are three kinds of work order, each with a different role: Master (starts the process), Reference (handles containers), and Partitioned (performs the work of the operation).

Master Work Orders

Master work orders start the process. They contain the full query or list of objects for the entire operation, and their job is to break that query or object list into smaller chunks that can be worked on.

Reference Work Orders

Reference work orders usually represent containers. For example, if a work order processing a list of objects comes across a folder with a million objects in it, it creates a reference work order for that folder and routes it to the queue. Another process picks up that reference work order, executes it, and breaks the million-object folder into smaller work orders.

Partitioned Work Orders

The partitioned work order is the lowest-level work order and performs the work of the operation on the objects. It contains a fixed number of objects to work on, specified either as a query over a range of object IDs or as a list. A partitioned work order may create reference work orders if it comes across a container in its list of objects.
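A partitioned work order can describe its fixed-size chunk as a range of object IDs rather than an explicit list. The sketch below is a hypothetical helper, `partition_by_id_range`, assuming object IDs are strings that sort consistently (the sample IDs in the usage are made up in Documentum's 16-hex-digit style). It sorts the IDs and emits an inclusive (first, last) pair per chunk; it does not reproduce how RPS actually builds its partition queries.

```python
from typing import Iterable, List, Tuple


def partition_by_id_range(object_ids: Iterable[str], size: int) -> List[Tuple[str, str]]:
    """Splits object IDs into chunks of at most `size`, each as (first_id, last_id).

    Hypothetical helper: sorting first makes every chunk a contiguous,
    inclusive ID range that a partitioned work order could query.
    """
    if size <= 0:
        raise ValueError("size must be positive")
    ordered = sorted(object_ids)
    ranges = []
    for start in range(0, len(ordered), size):
        chunk = ordered[start:start + size]
        ranges.append((chunk[0], chunk[-1]))
    return ranges
```

A range only identifies the right objects when combined with the operation's original selection criteria, because unrelated objects can have IDs that fall inside the same range; an explicit list avoids that at the cost of a larger work order.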

The following example shows what happens when the framework chunks up an operation. The master is created first, and it contains a list of all the objects to process; in this example, 10 objects (a deliberately small number, just to show how the system works).

The master is broken into 2 work orders of 5 objects each. Both are routed to the queue, and processing begins. In the first work order, the second object processed is a folder, so it spawns a reference work order containing a query that fetches all objects in this folder.

The reference work order is placed on the queue and then picked up by the next available processor. The second work order processes 2 folders, which will spawn 2 reference work orders, and these will also be placed on the queue.

The first two work orders are now finished and update their status. The 3 reference work orders now execute, and each creates one partitioned work order. The reference work orders have then finished their own work.

The partitioned work orders are now routed and picked up by a processor. The first and third work orders complete their tasks, update their status, and indicate to their parents that they are done.

The second partitioned work order finds 2 folders while executing, and the system determines that 1 partitioned work order needs to be created. It creates the work order and routes it for execution. That partitioned work order executes and, because the folder is empty, no additional work orders are created.

All work orders complete and update their own status and that of their parents. The master is the last to update, once its 2 children report that they are done.
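The walkthrough above can be modelled as a small simulation. This is a simplified sketch under stated assumptions, not the RPS implementation: `Folder`, `WorkOrder`, and `run` are hypothetical names, a Python deque stands in for the queue, every folder found by a partitioned work order spawns a reference work order, and a work order counts as done only when its own work and all of its children are finished, at which point it reports to its parent. Details such as how many partitioned work orders a reference work order creates differ from the exact example above.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Folder:
    name: str
    contents: list = field(default_factory=list)   # documents (str) or Folders


@dataclass
class WorkOrder:
    kind: str                              # "master", "reference" or "partitioned"
    items: list                            # objects this work order covers
    parent: Optional["WorkOrder"] = None
    open_children: int = 0
    finished_own_work: bool = False
    done: bool = False


def run(objects: list, chunk_size: int = 5) -> Tuple[WorkOrder, List[str]]:
    """Simulates the breakdown; returns the master and the completion order."""
    queue = deque()
    completed: List[str] = []
    master = WorkOrder("master", objects)
    queue.append(master)

    def spawn(parent: WorkOrder, kind: str, items: list) -> None:
        parent.open_children += 1
        queue.append(WorkOrder(kind, items, parent))   # route child to the queue

    def complete_upwards(order: Optional[WorkOrder]) -> None:
        # Done = own work finished and every child done; then tell the parent.
        while (order is not None and order.finished_own_work
               and order.open_children == 0 and not order.done):
            order.done = True
            completed.append(order.kind)
            if order.parent is not None:
                order.parent.open_children -= 1
            order = order.parent

    while queue:
        order = queue.popleft()              # next available processor picks it up
        if order.kind in ("master", "reference"):
            # Break the object list into fixed-size partitioned work orders
            # (an empty folder produces none).
            for start in range(0, len(order.items), chunk_size):
                spawn(order, "partitioned", order.items[start:start + chunk_size])
        else:
            for item in order.items:
                if isinstance(item, Folder):
                    spawn(order, "reference", item.contents)
                # a plain document would have the operation applied here
        order.finished_own_work = True
        complete_upwards(order)
    return master, completed
```

Tracking an open-children counter on each parent is one simple way to get the behaviour described above, where the master is always the last work order to report completion.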

Object Types

- Master work order (dmc_rps_master_work_order)

  - Overall information about a work order

  - Input and output are stored as pages of content

- Reference work order (dmc_rps_ref_work_order)

  - Information about a folder/container being processed

- Partitioned work order (dmc_rps_part_work_order)

  - Information about a work order that has been partitioned (either the master or a reference work order)

- Work Order Result (dmc_rps_work_order_result)

  - Information about a particular item that was processed

  - Used by the work order item report

- Work Order Configuration (dmc_rps_work_order_config)

  - Configuration for the overall framework

- Work Order Operation Configuration (dmc_rps_work_order_op_cfg)

  - Defined per operation; used for thresholds and to register operations

- dmc_rps_last_transfer_rel

  - Used by the Last Transferred Item list tab on a Transfer set (NARA, DoD)

  - One per item that was previously transferred (per disposition bundle)

- dmc_rps_transfer_rel

  - Used by the Manifest list tab on a Transfer set (DoD)

  - One per item that was disposed (per transfer strategy)

- dmc_rps_unsucc_child_disp

  - A temporary relation created during disposition processing

  - Indicates that a child could not be successfully disposed

- Disposition configuration object (has more attributes)

  - Export location

  - Job parameters moved to this object

  - Able to specify rollover policies for both linked and individual

Basic Queue Operations

The queue can accept a message together with an associated custom task and a profile. Within the task, the object ID of a work order can be associated with the message. There is also an API to retrieve messages from the queue and to identify which task is associated with a queue message. The queue signs off each message to a single process, so a message is given to only one process.
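The "only one process gets a message" guarantee can be illustrated with a toy in-memory queue. This is a sketch assuming a single lock can stand in for whatever atomic assignment the repository performs; `InMemoryQueue`, `put`, and `sign_off` are hypothetical names, not the Documentum queue API. Each item carries the associated task, the work order's object ID, and the server it has been signed off to.

```python
import threading
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class QueueItem:
    item_id: int
    task_name: str                       # custom task associated with the message
    work_order_id: str                   # object ID of the work order for the task
    sign_off_user: Optional[str] = None  # server the item is signed off to


class InMemoryQueue:
    """Toy stand-in for a dm_queue; every item is signed off to one server only."""

    def __init__(self) -> None:
        self._items: List[QueueItem] = []
        self._lock = threading.Lock()

    def put(self, task_name: str, work_order_id: str) -> QueueItem:
        with self._lock:
            item = QueueItem(len(self._items), task_name, work_order_id)
            self._items.append(item)
            return item

    def sign_off(self, server: str, limit: int) -> List[QueueItem]:
        # Finding unassigned items and assigning them happen under one lock,
        # so two servers polling at the same moment never get the same item.
        with self._lock:
            free = [i for i in self._items if i.sign_off_user is None][:limit]
            for item in free:
                item.sign_off_user = server
            return free
```

If the find and the assign were separate steps without the lock, two servers could both see the same unassigned item and both process it, which is exactly what signing off is meant to prevent.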

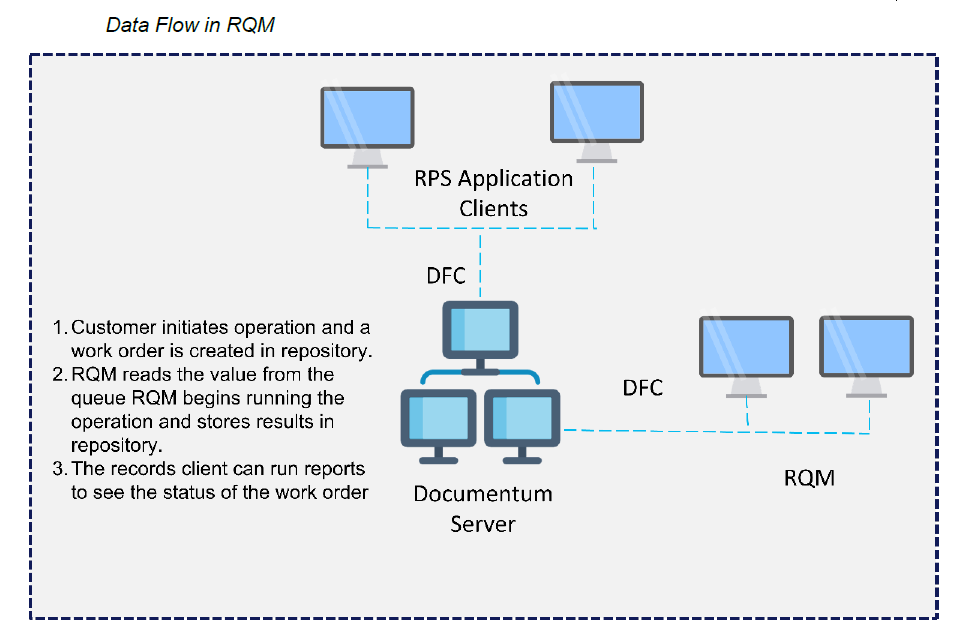

Data Flow

RQM executes the custom task associated with a message within a docbase session and thread. The process is independent of the docbase and can run on any server machine (including virtualized ones). It can be configured to use a fixed number of threads, where each thread processes one message. A single process can also service more than one queue in separate docbases. On startup, the process connects to each configured docbase and creates a session manager for each one.

The process runs as follows (for each docbase):

- The process polls the queue for messages to execute (if there is a free thread).

- If a queue item is found, it is assigned to the process.

- Depending on the configuration, more queue items may be assigned to the process than there are threads available (load-balancing algorithm).

- The process creates a thread for the message.

- The process creates a docbase session within the thread.

- The RQM task associated with the message is executed.

- The task executes the TBO method on the work order.

- Once the method finishes, the session is disconnected, and the thread becomes available for another message.
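The per-message lifecycle above (thread, session, task, disconnect) can be sketched with Python's `concurrent.futures.ThreadPoolExecutor`. This is an illustration under assumptions: `docbase_session`, `run_message`, and `process_messages` are hypothetical names, the session is a plain dictionary rather than a real docbase connection, and the pool reuses a fixed number of worker threads instead of creating a new thread per message, which matches the "fixed number of threads" configuration.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from contextlib import contextmanager
from typing import Callable, List


@contextmanager
def docbase_session(docbase: str):
    """Hypothetical stand-in for opening and disconnecting a docbase session."""
    session = {"docbase": docbase,
               "thread": threading.current_thread().name,
               "closed": False}
    try:
        yield session
    finally:
        session["closed"] = True        # disconnect once the task finishes


def run_message(docbase: str, message: str, task: Callable) -> object:
    # One session per message, created inside the worker thread.
    with docbase_session(docbase) as session:
        return task(session, message)


def process_messages(docbase: str, messages: List[str], task: Callable,
                     max_threads: int) -> list:
    # At most max_threads messages execute at once; a worker thread becomes
    # available for the next message as soon as its session is closed.
    with ThreadPoolExecutor(max_workers=max_threads) as pool:
        futures = [pool.submit(run_message, docbase, m, task) for m in messages]
        return [f.result() for f in futures]
```

Opening the session inside the worker thread, rather than sharing one session across threads, keeps each message's work isolated and lets the session be closed deterministically when the task returns or fails.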

There are configuration options for:

- Number of threads.

- Number of messages to sign off on at any time.

- Interval for polling the queue for more messages.

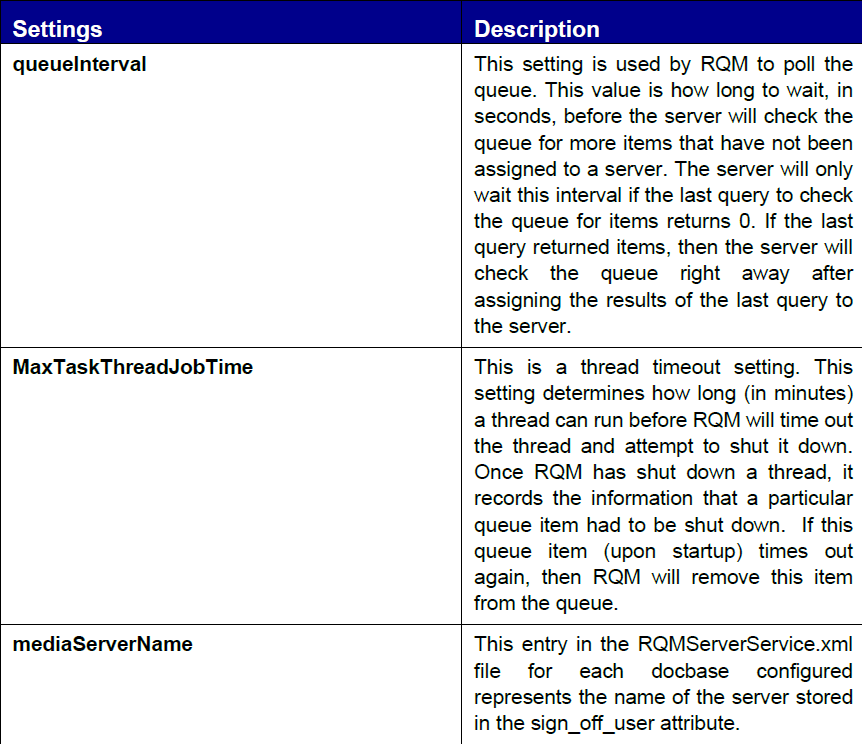

Another setting is used by RQM to poll the queue:

- queueInterval: how long to wait, in seconds, before the server checks the queue for more items that have not been assigned to a server. The server waits this interval only if the last query for queue items returned 0. If the last query returned items, the server checks the queue again right away after assigning the results of that query to itself.
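The queueInterval rule (wait only after an empty poll) is easy to get backwards, so here is a minimal sketch of it. `poll_loop` and the `poll_once` callback are hypothetical names; the loop runs a bounded number of rounds only so it can be demonstrated, whereas a real service would loop indefinitely, and `sleep` is injectable so the behaviour can be checked without actually waiting.

```python
import time
from typing import Callable, List


def poll_loop(poll_once: Callable[[], int], queue_interval: float, rounds: int,
              sleep: Callable[[float], None] = time.sleep) -> List[float]:
    """Polls `rounds` times; returns the wait applied after each poll.

    poll_once is a hypothetical callback that signs off on unassigned queue
    items and returns how many it found.
    """
    waits = []
    for _ in range(rounds):
        found = poll_once()
        if found == 0:
            sleep(queue_interval)       # nothing found: wait queueInterval seconds
            waits.append(queue_interval)
        else:
            waits.append(0.0)           # items found: check the queue again right away
    return waits
```

For example, if successive polls find 3, 0, 2, 0, and 0 items with a 5-second interval, the server waits only after the three empty polls.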

Use Cases

…

Reference Configuration Settings for RQM

Many Documentum customers already have billion-object repositories with content growing at an unprecedented rate. Retention policies change over time, and policy changes can require updates to very large volumes of documents. In such scenarios, multiple RQM services are required to process documents. RQM scales easily: additional RQM servers can be set up to handle more workload. With RQM, the processor-intensive requirements shift from the RPS application server to any number of dedicated RQM servers.

Load Balancing

RQM allows for multiple threads and multiple processes to execute operations.

For an RQM server, it makes sense to get as many threads running concurrently as possible. There is a point, however, beyond which the performance of each thread diminishes as more threads are added. The key is to find, for each server, the number of concurrent threads that gives optimal performance. Experimental results suggest that an optimal approach is 1 thread per processor core on a dedicated CPU.

Additionally, in a multi-server environment, processes waiting to be run can be load-balanced across servers. Load balancing matters only if spreading the load evenly over multiple servers (so that no server is running its maximum number of threads) results in better performance. If performance does not degrade when a server runs its maximum number of threads, load balancing gains nothing.

For Example:

There are 150 queue items to be processed and 2 servers, each configured for a maximum of 100 threads. If running 100 threads on server 1 and 50 threads on server 2 performs no worse than running 75 threads on each server, then configuring the system to distribute processes equally across the servers would not increase performance.

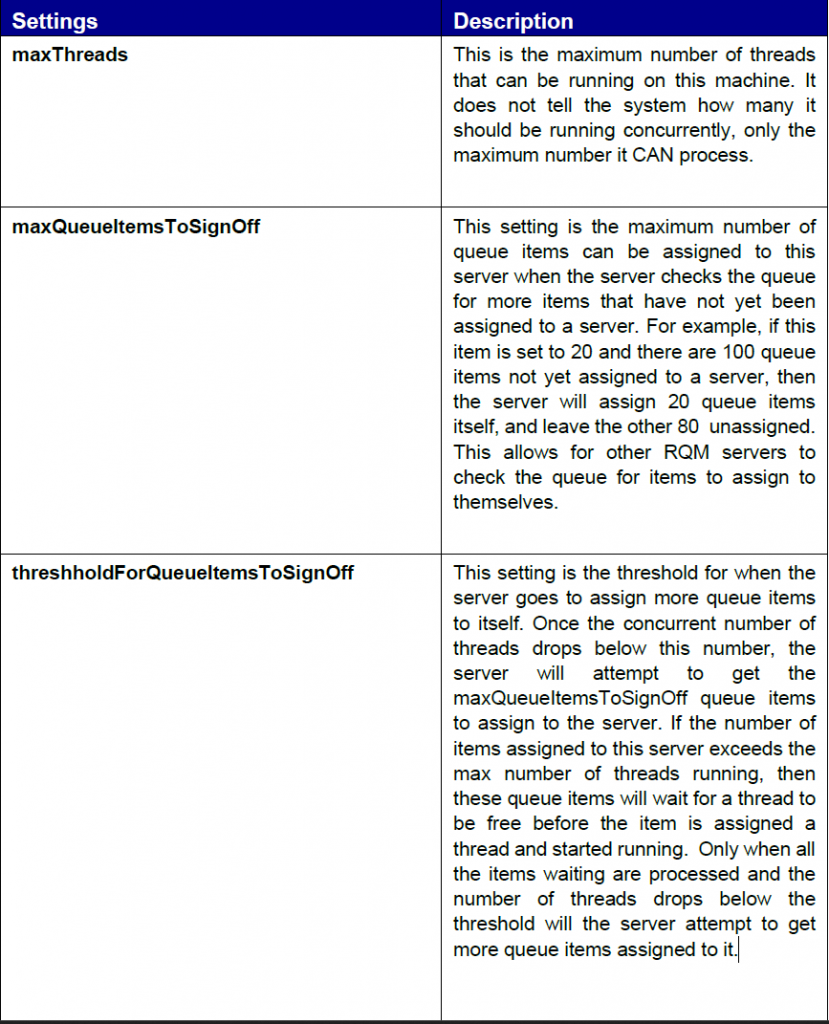

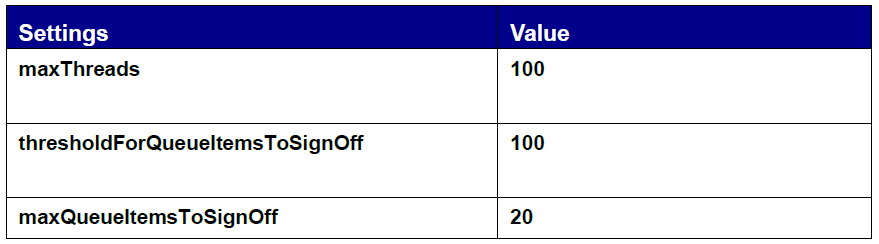

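Three RQM settings determine how many threads can run, how many can be running before the server signs off on more queue items, and how many queue items to get in a new block:

- maxThreads: the maximum number of threads that can run concurrently on the server.

- maxQueueItemsToSignOff: the maximum number of queue items the server signs off on in a single block.

- threshholdForQueueItemsToSignOff: the number of running threads at which the server stops signing off on new blocks and waits for threads to complete.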

To “sign off” a queue item means to assign it to a server. It does not mean that the queue item has been given a thread and started processing; it only means that the queue item is now waiting for a free thread on that server. As soon as a free thread is available, the queue item starts processing.

Together, these settings let multiple servers load-balance the processing of the queue. They prevent a single server from signing off on all the queue items and leaving nothing for the other servers to process.

Single RQM Server with example and flow

There are 140 queue items to be processed; however, they are not added to the queue all at once. They are added while other processing is already executing.

- RQM queries the queue for items that have not been assigned to a server and finds 25. Since this is less than maxQueueItemsToSignOff, the server signs off on all 25.

  - Since the number of running threads is less than threshholdForQueueItemsToSignOff, the server starts processing 25 threads.

- RQM queries the queue again and finds 40 unassigned items. Since this equals maxQueueItemsToSignOff, the server signs off on all 40.

  - 5 threads have completed, so there are now 60 running threads.

- RQM queries the queue again and finds 50 unassigned items. Since this is more than maxQueueItemsToSignOff, the server signs off on only 40, leaving 10 on the queue.

  - 10 more threads have completed, so there are now 90 running threads (that is, 90 signed off).

  - Since the number of running threads is greater than threshholdForQueueItemsToSignOff, the server waits until 11 threads complete before attempting to sign off on the next batch.

  - 11 threads complete.

- RQM queries the queue again and finds 35 unassigned items (all the items left: the 10 from the last query and the last 25). Since this is less than maxQueueItemsToSignOff, the server signs off on all 35.

- There are now 114 queue items assigned to the server, and 100 threads are running (maxThreads). As each thread completes, an item immediately comes off the waiting list and starts executing. This repeats until no items are left waiting on the server, and the process finishes when all threads complete.
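The walkthrough can be replayed with a small model of the sign-off rule. This is a sketch under assumptions, not RQM code: `SignOffPolicy` and `replay_single_server_example` are hypothetical names, and the values maxThreads = 100, maxQueueItemsToSignOff = 40, and threshholdForQueueItemsToSignOff = 80 are inferred from the numbers in the steps (the server still signs off at 60 running items, waits at 90, and resumes only after 11 complete, at 79). The model signs off on up to maxQueueItemsToSignOff items per poll, but only while fewer than the threshold are assigned.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SignOffPolicy:
    """Hypothetical model of the sign-off rule in the walkthrough (not RQM's code)."""
    max_threads: int     # maxThreads
    max_sign_off: int    # maxQueueItemsToSignOff
    threshold: int       # threshholdForQueueItemsToSignOff

    def batch_size(self, assigned: int, unassigned: int) -> int:
        """Items to sign off on in one poll, given items already assigned here."""
        if assigned >= self.threshold:
            return 0                                  # wait for threads to complete
        return min(unassigned, self.max_sign_off)     # never more than one block

    def running_threads(self, assigned: int) -> int:
        """Assigned items beyond maxThreads wait for a free thread."""
        return min(assigned, self.max_threads)

    def max_assigned(self) -> int:
        """Worst case: threshold - 1 items held, then one full block signed off."""
        return (self.threshold - 1) + self.max_sign_off


def replay_single_server_example(policy: SignOffPolicy) -> List[int]:
    """Replays the 140-item walkthrough; returns items assigned after each step."""
    assigned = unassigned = 0
    history = []
    # (new items added to the queue, threads completing after the poll)
    steps = [(25, 0), (40, 5), (50, 10), (0, 11), (25, 0)]
    for new_items, completed in steps:
        unassigned += new_items
        taken = policy.batch_size(assigned, unassigned)
        unassigned -= taken
        assigned += taken - completed
        history.append(assigned)
    return history
```

With these assumed values the assigned count goes 25, 60, 90, 79, 114; `running_threads(114)` is 100, and the remaining 14 items wait for free threads, as in the last step.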

Initial settings for the RQM server are thresholdForQueueItemsToSignOff = maxThreads and maxQueueItemsToSignOff = 20. This keeps the number of threads being processed as close to maxThreads as possible. Setting maxQueueItemsToSignOff to 20 prevents one RQM server from assigning all the queue items to itself. The most that will be assigned to any one server is maxThreads + maxQueueItemsToSignOff.

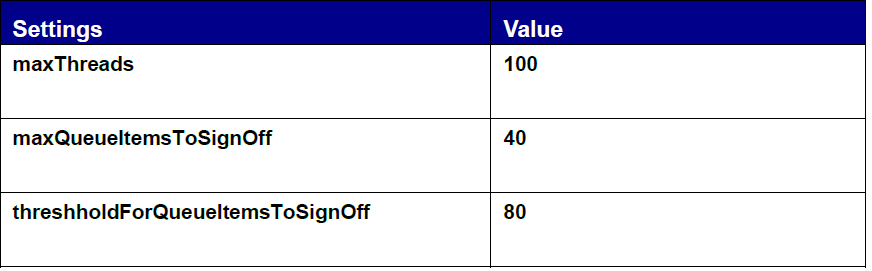

Multiple RQM Servers

We should also look at the number of queue items that we are creating. To optimally use all 4 or 5 servers, we should at least make sure that the number of queue items that are available is enough to keep all threads at their maxThreads.

4 servers each set to:

Each server could potentially have 119 items assigned to it at any one time, so keeping all 4 servers busy up to their maxThreads requires 476 queue items (4 × 119). mediaServerName is an entry in the CTSServerService.xml file for each configured docbase; it is the server name stored in the sign_off_user attribute.
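The 119 and 476 figures can be reproduced with a little arithmetic. The per-server settings are not listed above, so this sketch assumes the sign-off rule implied by the single-server walkthrough: a server signs off on a new block only while it holds fewer than threshholdForQueueItemsToSignOff items, so it can hold at most threshold - 1 items plus one full block. The function names are hypothetical. Two assumed settings both give 119 per server: the walkthrough's implied values (threshold 80, maxQueueItemsToSignOff 40) and the initial values recommended above (threshold = maxThreads = 100, maxQueueItemsToSignOff 20).

```python
def max_items_per_server(threshold: int, max_sign_off: int) -> int:
    # A server signs off only while it holds fewer than `threshold` items, so
    # the worst case is threshold - 1 items held plus one full block.
    return (threshold - 1) + max_sign_off


def items_to_keep_busy(servers: int, threshold: int, max_sign_off: int) -> int:
    # Queue items needed so every server can reach its maximum assignment.
    return servers * max_items_per_server(threshold, max_sign_off)
```

Under this model, adding servers raises the required backlog linearly, which is why the number of queue items created by an operation matters as much as the number of servers.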

Startup/Recovery

When RQM starts up, it checks whether any queue items are already assigned to it (that is, signed off). If any exist, RQM moves into Recovery mode: it assumes it was interrupted and finishes the currently assigned queue items before it attempts to get new ones.

It does this by checking for queue items whose sign_off_user attribute is set to its own server name.

Once RQM enters Recovery mode, it will process each queue item assigned to it one at a time.

Because it does not matter which RQM server executes a work order, you can prevent an RQM server from switching into Recovery mode on startup by clearing the sign_off_user attribute of the dmi_queue_item entries assigned to that server before starting it. The RQM server then starts signing off on queue items right away.
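The startup decision, sequential recovery, and the effect of clearing sign_off_user can be sketched as follows. This assumes an in-memory list of queue items and uses hypothetical function names (`startup_mode`, `recover`, `clear_sign_off`); in a real deployment the items are dmi_queue_item objects in the repository, and how the attribute is cleared there is not shown here.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class QueueItem:
    item_id: int
    sign_off_user: Optional[str] = None   # server the item is signed off to


def startup_mode(server_name: str, items: List[QueueItem]) -> str:
    """Recovery if anything is still signed off to this server, else normal."""
    if any(item.sign_off_user == server_name for item in items):
        return "recovery"
    return "normal"


def recover(server_name: str, items: List[QueueItem],
            execute: Callable[[QueueItem], None]) -> List[int]:
    """Recovery mode: process this server's leftover items one at a time."""
    done = []
    for item in items:
        if item.sign_off_user == server_name:
            execute(item)                 # sequential, no thread pool
            done.append(item.item_id)
    return done


def clear_sign_off(server_name: str, items: List[QueueItem]) -> int:
    """Releases this server's items so any server can sign off on them."""
    cleared = 0
    for item in items:
        if item.sign_off_user == server_name:
            item.sign_off_user = None
            cleared += 1
    return cleared
```

Clearing only the restarting server's items leaves work signed off to other servers untouched, and the released items return to the pool that every server polls.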

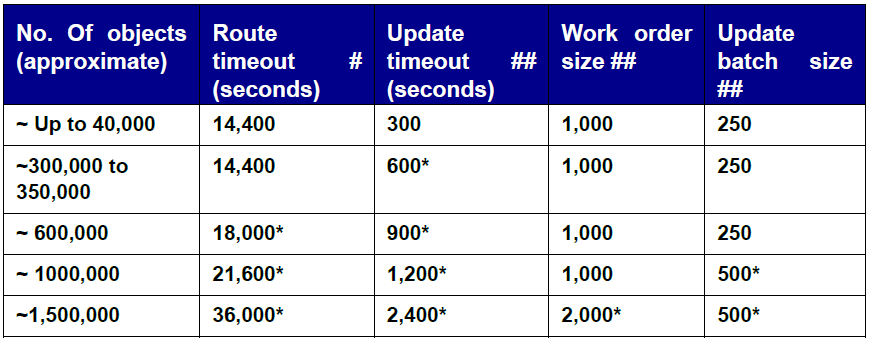

Work Order Configuration Settings

APPLY_REMOVE_RECORDS_POLICY operation

Refer to the table below for the work order configuration settings for different sets of data. These values are only recommendations, based on internal testing performed with one RQM server and one Documentum Server, and can be adjusted based on the performance of the end user's system.

- Modified setting

Work order Configuration (dmc_rps_work_order_config)

Work order Operations Configuration (dmc_rps_work_order_op_cfg)

…………………

Persistence Model

All messages are stored as dmi_queue_item objects on a dm_queue in the docbase. If a process goes down, this information is preserved, and the process can continue where it left off. If a task was executing when the process went down, its work order has to be re-routed to the queue to be re-executed.

Sample Configuration

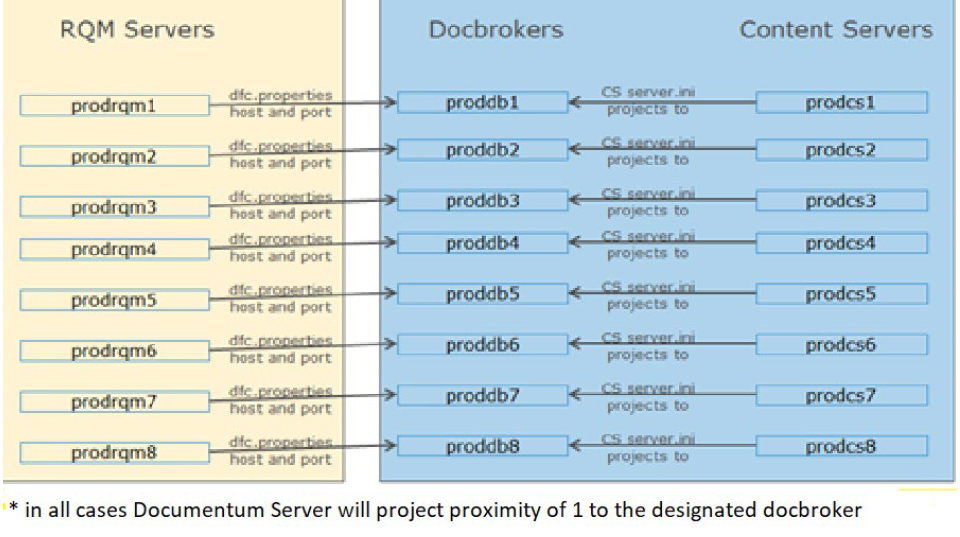

For optimal RQM configuration and performance, testing found that a 1:1 relationship between RQM instances and Documentum Server instances works best, with all instances pointing to the same docbase.

Also, using a 64-bit JVM will achieve better performance as additional heap memory can be allocated to each JVM instance.

Configuration:

- maxThreads = 30

- maxQueueItemsToSignOff = 20

- threshholdForQueueItemsToSignOff = 20

- JVMHeap = 2048

8 Documentum Server Instances, 8 Docbrokers and 8 RQM Servers

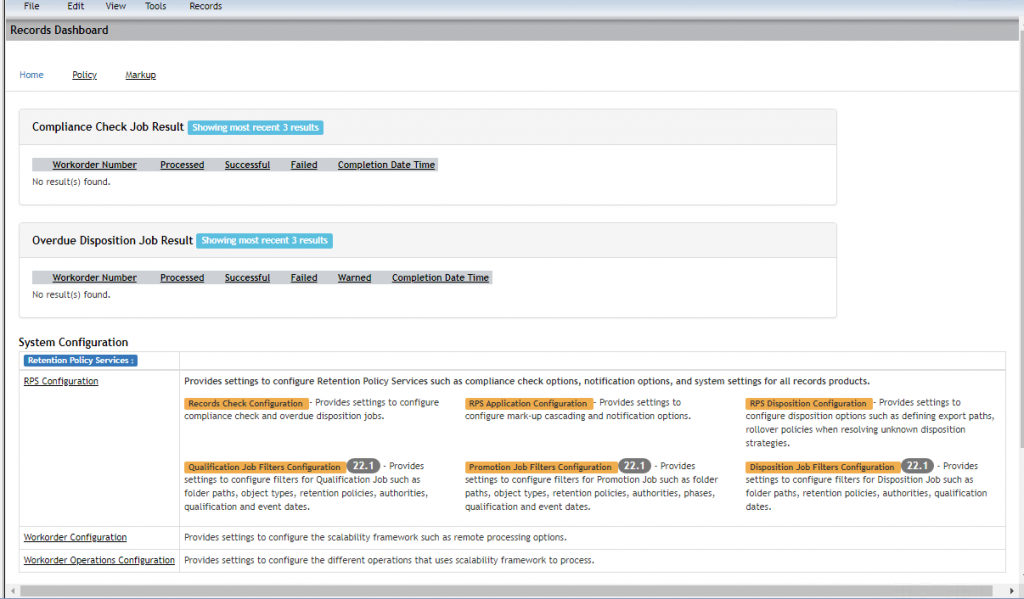

Records Dashboard: